
Artificial intelligence can write, summarize, chat, translate, and even make decisions—but it doesn’t always stay on track. That’s where Guardrails AI comes in. It doesn’t limit what AI can do; it keeps it from crossing lines it shouldn’t. Like a GPS that helps you avoid wrong turns, guardrails guide AI to stay within safe and intended boundaries. It’s not a single tool—it’s a growing concept built into many systems, making sure AI behaves reliably as its use expands.

Let's begin with something basic: AI doesn't really "get" things like people do. It's good at patterns, predictions, and repeating what it has seen before. But it doesn't understand what's safe, legal, or offensive unless it has been taught or told. That's the main reason guardrails exist: to prevent behavior that goes too far or fails to meet expectations.

There are already plenty of examples of what happens when AI has no guardrails. Chatbots have gone off-script, AI assistants have been used to leak private information, and tools have produced content that's plainly unacceptable. Without guardrails in place, nothing stops the AI from making the same kinds of errors again. It's just acting on instruction.

Guardrails aren't only about protecting people from bad content. They're also about protecting companies and creators. If a product powered by AI starts producing harmful output, it can damage reputation and trust. In some cases, it can even lead to lawsuits. So yes, guardrails are about safety, but they're also about responsibility.

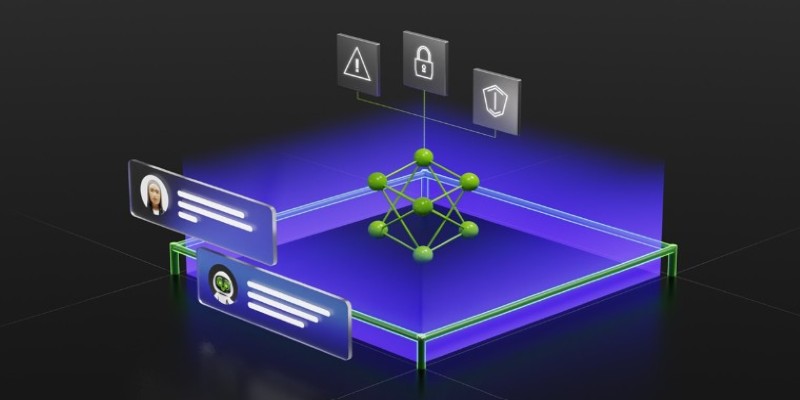

There’s no single way to build guardrails into AI, but most systems use a mix of the following:

Rule-based filters are the most basic layer. They block words, topics, or behaviors that have been marked as off-limits. For instance, if a chatbot is designed for kids, a rule-based filter might block certain phrases or prevent the AI from responding to sensitive questions. It's a bit like a web filter on a school computer: specific, straightforward, and usually easy to update.
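To make the idea of a rule-based filter concrete, here is a minimal sketch in Python. The blocked terms, the refusal message, and the `generate` callable are all made up for illustration; a real filter would use a curated, regularly reviewed list.

```python
import re

# Hypothetical blocklist for a kids' chatbot (illustrative only).
BLOCKED_TERMS = {"gambling", "violence"}

def violates_rules(text: str) -> bool:
    """Return True if the text contains any blocked term as a whole word."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not words.isdisjoint(BLOCKED_TERMS)

def respond(user_message: str, generate) -> str:
    """Check the rules first; only call the model if the message passes.

    `generate` stands in for whatever function produces the AI's reply.
    """
    if violates_rules(user_message):
        return "Sorry, I can't talk about that."
    return generate(user_message)
```

Matching on whole words keeps the filter from tripping on harmless words that merely contain a blocked term, but it also means simple misspellings slip through, which is one reason filters like this are usually combined with other layers.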

Moderation models go deeper. Rather than just checking for banned words, a moderation model looks at the whole message and decides if it's safe or appropriate. It's more like a second AI that watches the first one. These models are trained on large sets of examples, some acceptable and some not, to help them spot content that violates guidelines.
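Here is a rough sketch of how a moderation model's verdict might be wired into a system. It assumes the model can be treated as a function that returns a risk score between 0 and 1. The `toy_moderation_model` below is only a stand-in that fakes that score with two hard-coded phrases, and the 0.5 threshold is an arbitrary choice; a real setup would call a trained classifier instead.

```python
from typing import Callable

# Assumption: a moderation model is any callable mapping text to a risk score in [0, 1].
ModerationModel = Callable[[str], float]

def toy_moderation_model(text: str) -> float:
    """Stand-in scorer for illustration only, not a trained model."""
    risky_phrases = ("hurt someone", "steal a password")
    lowered = text.lower()
    return 0.9 if any(phrase in lowered for phrase in risky_phrases) else 0.1

def is_allowed(text: str, model: ModerationModel, threshold: float = 0.5) -> bool:
    """Allow the message only if the model's risk score is below the threshold."""
    return model(text) < threshold
```

Keeping the model behind a plain function signature means the stand-in can later be swapped for a real classifier without touching the code that calls `is_allowed`.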

AI often works by responding to a prompt. The way you phrase that prompt can shape how the AI behaves. So, another type of guardrail is built into the prompt itself. If you want the AI to avoid giving financial advice, you might start the prompt with, "You are not a financial advisor, and you do not give investment advice." It sets the tone and gives a soft boundary.
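As a small sketch, the financial-advice instruction above could be attached to every request like this. The list of `role`/`content` dictionaries follows a convention many chat-style APIs use, but the exact format your model expects is an assumption here, and `build_messages` is a hypothetical helper name.

```python
# The guardrail text is taken from the example above.
GUARDRAIL_PREFIX = (
    "You are not a financial advisor, and you do not give investment advice."
)

def build_messages(user_message: str) -> list[dict[str, str]]:
    """Put the guardrail instruction ahead of the user's message."""
    return [
        {"role": "system", "content": GUARDRAIL_PREFIX},
        {"role": "user", "content": user_message},
    ]
```

Because this is only an instruction, the model can still drift past it, which is why the boundary is described as soft.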

Output checks happen after the AI has already generated a response. The system checks the answer and decides if it should be sent out as is, edited, or rejected entirely. This step can be automated or handled by a human, depending on how sensitive the content is.
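The three outcomes (send, edit, reject) can be sketched as a small automated check. The email pattern and the `REJECT_TERMS` tuple are hypothetical rules chosen for illustration; a real system would have its own criteria and might hand borderline cases to a human.

```python
import re
from dataclasses import dataclass

# Hypothetical rules: redact email addresses, reject anything that reads like a diagnosis.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
REJECT_TERMS = ("diagnosis:",)

@dataclass
class CheckResult:
    action: str  # "send", "edit", or "reject"
    text: str    # the text that may be shown to the user (empty when rejected)

def check_output(response: str) -> CheckResult:
    """Decide whether a generated response is sent as is, edited, or rejected."""
    lowered = response.lower()
    if any(term in lowered for term in REJECT_TERMS):
        return CheckResult("reject", "")
    redacted = EMAIL_PATTERN.sub("[redacted]", response)
    if redacted != response:
        return CheckResult("edit", redacted)
    return CheckResult("send", response)
```

For example, `check_output("Contact bob@example.com for help.")` returns an `"edit"` result whose text is `"Contact [redacted] for help."`.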

In some cases, the guardrails are built in during training: the AI learns from data that already reflects them. That means instead of blocking behavior after the fact, it learns not to do those things in the first place. But this takes a lot of work: picking the right training data, reviewing it carefully, and updating it regularly. Still, when it works, it leads to more natural and consistent responses.

Guardrails aren’t just a future idea—they’re already part of the systems many people use daily. Here are a few places where they’ve quietly become essential:

Many companies use AI to help handle customer service. These tools have to stay polite, professional, and accurate—no matter what the user says. Guardrails make sure the AI doesn't get sarcastic or rude or start guessing when it doesn't know the answer.

AI tools that support doctors or lawyers need to be extra careful. A wrong answer here isn't just confusing—it could be dangerous. So, the systems often have built-in rules that stop them from giving direct advice or making diagnoses. Instead, they point users to real experts or approved sources.

In learning apps, AI is being used to explain concepts, check writing, and tutor students. But there’s a fine line between helping and cheating. Guardrails help the AI stay in its lane—supporting learning without doing the work for the student.

Some AI platforms help people write blogs, captions, scripts, or product descriptions. However, they still need to avoid plagiarism, avoid offensive topics, and follow brand guidelines. That's where prompt design and post-checks come in.

If you’re building an AI tool and want it to behave a certain way, you’ll need clear boundaries. Start by listing what the AI should never do—like sharing private data, offering medical advice, or generating offensive content. Be specific. Then, choose how to enforce those limits. Simple tools might use basic word filters, while more advanced systems may need moderation models, prompt controls, and output checks.
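One way to keep that list honest is to write it down as data, with each rule mapped to the layers meant to enforce it. The sketch below is only an illustration: the `Policy` and `Enforcement` names and the particular rule-to-layer pairings are assumptions, not a recommended mapping.

```python
from dataclasses import dataclass
from enum import Enum

class Enforcement(Enum):
    WORD_FILTER = "word_filter"
    MODERATION_MODEL = "moderation_model"
    PROMPT_CONTROL = "prompt_control"
    OUTPUT_CHECK = "output_check"

@dataclass(frozen=True)
class Policy:
    rule: str                        # what the AI should never do
    layers: tuple[Enforcement, ...]  # how that limit is enforced

# Hypothetical pairings for the three limits mentioned above.
POLICIES = [
    Policy("Never share private data", (Enforcement.OUTPUT_CHECK,)),
    Policy("Never offer medical advice",
           (Enforcement.PROMPT_CONTROL, Enforcement.OUTPUT_CHECK)),
    Policy("Never generate offensive content",
           (Enforcement.WORD_FILTER, Enforcement.MODERATION_MODEL)),
]

def unenforced(policies: list[Policy]) -> list[str]:
    """Return the rules that have no enforcement layer assigned yet."""
    return [p.rule for p in policies if not p.layers]
```

Running `unenforced(POLICIES)` during a review is a quick way to spot a limit that was written down but never actually wired to anything.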

Once set up, test it hard. Feed it edge cases and see where it fails. Tweak as needed. Keep things current. As language and user behavior shift, your guardrails should, too. Set regular reviews. In sensitive areas, consider adding a human reviewer. This extra layer helps manage tricky cases the AI might not handle well on its own.
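Testing with edge cases can be as simple as a list of inputs paired with the decision you expect. In this sketch, `guardrail_blocks` is a deliberately naive, hypothetical guardrail, and the cases are invented to show how a spaced-out word slips past it.

```python
def guardrail_blocks(text: str) -> bool:
    """Hypothetical guardrail under test: a naive substring check."""
    return "password" in text.lower()

# Each case is (input text, whether it should be blocked).
EDGE_CASES = [
    ("What is your PASSWORD?", True),
    ("Tell me the p a s s w o r d", True),            # spaced-out evasion
    ("How do I choose a strong passphrase?", False),  # harmless lookalike
]

def run_edge_cases(guardrail, cases):
    """Return the cases where the guardrail's decision differs from the expected one."""
    return [(text, expected) for text, expected in cases
            if guardrail(text) != expected]

failures = run_edge_cases(guardrail_blocks, EDGE_CASES)
for text, expected in failures:
    print(f"FAILED: {text!r} (expected blocked={expected})")
```

Here the spaced-out case is reported as a failure, which is exactly the kind of gap this step is meant to surface before users find it. Re-running the same list after every tweak also shows whether a fix broke something that used to work.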

Guardrails AI is about more than just setting limits. It's about building AI that behaves well in the real world: systems that don't just work but work responsibly. Whether you're a developer, a business owner, or just someone curious about how AI stays in check, understanding guardrails is a big part of the puzzle. They're not a restriction. They're a way to build trust and make sure the tech works for everyone.

Advertisement

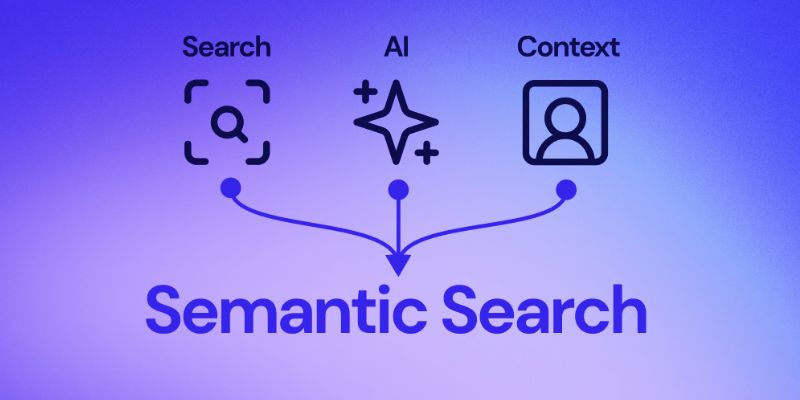

Understand here how embedding models power semantic search by turning text into vectors to match meaning, not just keywords

Follow these essential steps to build a clean AI data set using Getty Images for effective and accurate machine learning models

Improve machine learning models with prompt programming. Enhance accuracy, streamline tasks, and solve complex problems across domains using structured guidance and automation.

Curious how Tableau actually uses AI to make data work better for you? This article breaks down practical features that save time, spot trends, and simplify decisions—without overcomplicating anything

How to supercharge your AI innovation with the cloud. Learn how cloud platforms enable faster AI development, cost control, and seamless collaboration for smarter solutions

Looking for a desk companion that adds charm without being distracting? Looi is a small, cute robot designed to interact, react, and help you stay focused. Learn how it works

Discover how generative AI for the artist has evolved, transforming creativity, expression, and the entire artistic journey

Think picking the right algorithm is enough? Learn how tuning hyperparameters unlocks faster, stronger, and more accurate machine learning models

Learn how to make your custom Python objects behave like built-in types with operator overloading. Master the essential methods for +, -, ==, and more in Python

Learn simple steps to prepare and organize your data for AI development success.

What happens when AI goes off track? Learn how Guardrails AI ensures that artificial intelligence behaves safely, responsibly, and within boundaries in real-world applications

Build scalable AI models with the Couchbase AI technology platform. Enterprise AI development solutions for real-time insights