
When training machine learning models, people often think the most important part is choosing the right algorithm. While that matters, the truth is that you can have a great algorithm and still end up with a model that performs poorly if the hyperparameters aren't tuned properly. Hyperparameters are like the settings that tell your model how to learn. If you don't set them right, you could be cutting your model’s potential short without even realizing it. The good news? With some careful tweaking, you can unlock much better results. Let’s get into it.

Hyperparameters control the behavior of a learning algorithm. Unlike model parameters, which the model learns on its own during training (like weights in a neural network), hyperparameters are fixed before the training process starts, either by hand or by a search procedure. Think of things like learning rate, number of trees in a random forest, number of layers in a neural network, batch size, and regularization strength.
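To make the distinction concrete, here is a minimal sketch in plain Python. The function `fit_slope` and the toy data are made up for illustration: the slope `w` is a parameter the model learns, while `learning_rate` and `n_epochs` are hyperparameters chosen before training.

```python
def fit_slope(xs, ys, learning_rate, n_epochs):
    """Fit y ~ w * x by gradient descent on mean squared error."""
    w = 0.0  # model parameter: learned from the data
    n = len(xs)
    for _ in range(n_epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated with a true slope of 2

# Same data and same algorithm; only the hyperparameter changes.
w_good = fit_slope(xs, ys, learning_rate=0.05, n_epochs=200)     # settles near 2
w_slow = fit_slope(xs, ys, learning_rate=0.0001, n_epochs=200)   # still far below 2
w_unstable = fit_slope(xs, ys, learning_rate=0.2, n_epochs=200)  # overshoots and diverges

print(w_good, w_slow, w_unstable)
```

Nothing about the algorithm changed between the three runs, yet only one of them produces a usable model.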

Choosing poor hyperparameters can make your model slow, inaccurate, or unable to generalize to new data. On the flip side, finding the right combination can mean a big boost in accuracy, faster convergence, and better overall stability. That’s why hyperparameter optimization isn’t just a technical step — it’s one of the most impactful things you can do for your model's success.

When it comes to finding the right hyperparameters, there’s more than one way to approach it. Some methods are basic, while others are more advanced. Let's look at a few of the most common ones:

Grid search is probably the first method you'll hear about. It involves creating a grid of possible hyperparameter values and systematically trying out every combination. If you're tuning two hyperparameters, it's like drawing a table where you try out every cell. It's simple and often effective when you don't have too many options, but it can get slow and expensive really fast, because the number of combinations multiplies with every hyperparameter you add.

One catch: grid search doesn’t get smarter over time. It doesn’t learn from past tries — it just plows through every possibility, whether it's promising or not.
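A rough sketch of grid search using only the standard library might look like this. The `validation_score` function is a hypothetical stand-in for "train a model with these settings and score it on validation data", and the grid values are arbitrary.

```python
import itertools
import math


def validation_score(learning_rate, max_depth):
    """Hypothetical stand-in for training a model and scoring it."""
    # Toy surface that peaks at learning_rate=0.01, max_depth=5.
    return -((math.log10(learning_rate) + 2) ** 2) - (max_depth - 5) ** 2


grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "max_depth": [3, 5, 7],
}

results = []
# itertools.product visits every cell of the table, promising or not.
for values in itertools.product(*grid.values()):
    params = dict(zip(grid.keys(), values))
    results.append((validation_score(**params), params))

best_score, best_params = max(results, key=lambda pair: pair[0])
print(len(results), best_params)
```

Two hyperparameters with three values each already means nine training runs, and the loop never uses earlier scores to skip later cells.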

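Random search shakes things up. Instead of testing every single combination, it samples combinations at random from the ranges you specify. Surprisingly, research (notably Bergstra and Bengio's 2012 study) has shown that random search often finds better models faster than grid search, especially when only a few hyperparameters really matter and the others aren't as sensitive. The reason is that a grid keeps retesting the same handful of values for each important hyperparameter, while every random trial tries a fresh one.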

You might feel weird trusting randomness, but with limited time and resources, random search usually covers more useful ground than grid search.
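Here is a small sketch of the same idea, again with a hypothetical `validation_score` standing in for real training. The budget of nine trials is chosen to match a 3 x 3 grid, and the sampling ranges are assumptions for illustration.

```python
import math
import random


def validation_score(learning_rate, max_depth):
    """Hypothetical stand-in for training a model and scoring it."""
    return -((math.log10(learning_rate) + 2) ** 2) - (max_depth - 5) ** 2


rng = random.Random(0)  # fixed seed so the run is repeatable
n_trials = 9            # same budget as a 3 x 3 grid

trials = []
for _ in range(n_trials):
    params = {
        # Sample the exponent uniformly, so the rate is log-uniform in [0.001, 0.1].
        "learning_rate": 10 ** rng.uniform(-3, -1),
        "max_depth": rng.randint(3, 7),  # inclusive on both ends
    }
    trials.append((validation_score(**params), params))

best_score, best_params = max(trials, key=lambda pair: pair[0])

# A 3 x 3 grid would only ever test 3 distinct learning rates.
distinct_rates = len({p["learning_rate"] for _, p in trials})
print(distinct_rates, best_params)
```

With the same nine runs, the grid probes three learning rates while random search probes nine, which is where its advantage comes from when learning rate is the setting that matters most.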

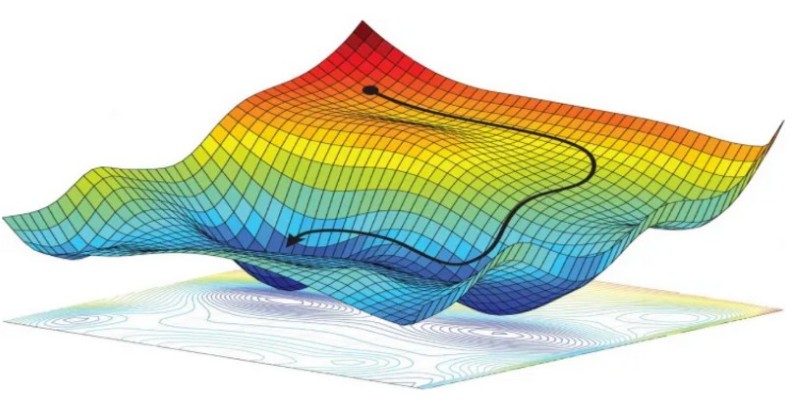

Bayesian optimization adds some real strategy to the process. It builds a model of how your hyperparameters impact your results and uses that model to decide what settings to try next. It’s like taking a guess — but an educated one — about what settings will work better.

Over time, Bayesian optimization balances trying new areas (exploration) and focusing more on promising areas (exploitation). This method often reaches better performance with fewer tries, but setting it up can be a little more technical.
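The loop below is a deliberately simplified sketch of that idea, not how production libraries implement it. It assumes a single hyperparameter scaled to [0, 1], a Gaussian-process surrogate with a fixed RBF kernel, and an upper-confidence-bound rule for picking the next trial. The `objective` function, the kernel length scale, and the exploration weight of 2.0 are all illustrative assumptions.

```python
import math


def objective(x):
    """Hypothetical validation score for one hyperparameter scaled to [0, 1]."""
    return -((x - 0.65) ** 2)


def rbf(a, b, length_scale=0.3):
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def posterior(xs, ys, x_new, jitter=1e-6):
    """Surrogate's mean and standard deviation at x_new (zero prior mean)."""
    K = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * w for k, w in zip(k_star, solve(K, ys)))
    var = rbf(x_new, x_new) - sum(k * v for k, v in zip(k_star, solve(K, k_star)))
    return mean, math.sqrt(max(var, 0.0))


candidates = [i / 100 for i in range(101)]
tried = [0.1, 0.5, 0.9]  # small initial design
scores = [objective(x) for x in tried]

for _ in range(10):
    def ucb(x):
        mean, std = posterior(tried, scores, x)
        return mean + 2.0 * std  # predicted score plus an exploration bonus

    next_x = max((x for x in candidates if x not in tried), key=ucb)
    tried.append(next_x)
    scores.append(objective(next_x))

best_x = tried[scores.index(max(scores))]
print(len(tried), best_x)
```

The `mean` term rewards settings the surrogate already believes are good (exploitation), and the `std` term rewards settings it knows little about (exploration). In practice you would normally reach for an established library rather than hand-rolling the surrogate.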

When models take a long time to train, waiting for every model to finish before deciding what to do next is painful. Hyperband and successive halving are smart methods that cut weak models early before they waste too much time. They test lots of hyperparameter settings with small budgets at first and only invest more resources in the ones that look good.

Think of it as a talent show for models. If a model shows early signs of doing well, it gets more stage time. If it doesn’t, it’s politely shown the door.
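A bare-bones sketch of successive halving is shown below. The `simulated_score` function is a made-up learning curve standing in for "train this configuration for `budget` epochs and validate it", and the halving factor of 2 is an assumption.

```python
import random


def simulated_score(config, budget):
    """Hypothetical stand-in for training `config` for `budget` epochs."""
    # The score climbs toward the configuration's ceiling as budget grows.
    return config["ceiling"] * (1 - 0.5 ** budget)


def successive_halving(configs, min_budget=1, eta=2):
    survivors = list(configs)
    budget = min_budget
    total_cost = 0
    while len(survivors) > 1:
        scored = [(simulated_score(c, budget), c) for c in survivors]
        total_cost += budget * len(survivors)
        scored.sort(key=lambda pair: pair[0], reverse=True)
        keep = max(1, len(survivors) // eta)
        survivors = [c for _, c in scored[:keep]]  # the rest are shown the door
        budget *= eta                              # survivors get more stage time
    return survivors[0], total_cost


rng = random.Random(1)
configs = [{"id": i, "ceiling": rng.uniform(0.5, 0.95)} for i in range(16)]

winner, total_cost = successive_halving(configs)
print(winner["id"], total_cost)  # cost is 16*1 + 8*2 + 4*4 + 2*8 epochs
```

Here the whole tournament costs 64 epochs, against 128 if all 16 configurations had been trained for the largest budget of 8. One caveat: in this toy the early ranking always matches the final one, whereas real learning curves can cross, which is the problem Hyperband tries to hedge against by running several such brackets with different starting budgets.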

While hyperparameter optimization can make your models way better, it’s not without its headaches. Let's cover a few of them:

As you add more hyperparameters to tune, the number of possible combinations grows multiplicatively, so it gets out of hand incredibly fast. This blow-up is often described as the "curse of dimensionality." What feels like a reasonable list at first can quickly turn into an ocean of possibilities. Random search and smarter methods like Bayesian optimization help here, but you still have to be thoughtful about how wide and deep you want your search to be.
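The arithmetic is easy to check for yourself. The grid sizes and the 10 minutes per run below are hypothetical numbers picked for illustration.

```python
import math

values_per_hyperparameter = 5
for n_hyperparameters in (1, 2, 3, 6):
    n_runs = values_per_hyperparameter ** n_hyperparameters
    print(n_hyperparameters, "hyperparameters ->", n_runs, "runs")

# Mixed grid sizes multiply the same way.
grid_sizes = {"learning_rate": 5, "batch_size": 4, "num_layers": 3, "dropout": 5}
total_runs = math.prod(grid_sizes.values())  # 5 * 4 * 3 * 5

minutes_per_run = 10  # hypothetical training time
hours = total_runs * minutes_per_run / 60
print(total_runs, "runs,", hours, "hours")
```

Four modest lists already add up to 300 runs and about 50 hours of sequential training.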

During optimization, you’re checking model performance on a validation set over and over again. If you’re not careful, you might end up tuning your model too specifically to that validation set — making it perform worse on truly new data. One way to reduce this risk is to use nested cross-validation, but it takes more time.
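To show the shape of nested cross-validation, here is a sketch with a tiny 1-D ridge regression written from scratch. The model, the synthetic data, and the candidate `alphas` are all assumptions for illustration. The point is the structure: an inner loop picks the hyperparameter, and an outer loop scores that choice on data the tuning never saw.

```python
import random


def fit_ridge_1d(points, alpha):
    """Closed-form 1-D ridge regression through the origin; returns the slope."""
    sxy = sum(x * y for x, y in points)
    sxx = sum(x * x for x, _ in points)
    return sxy / (sxx + alpha)


def mse(points, w):
    return sum((y - w * x) ** 2 for x, y in points) / len(points)


def k_folds(points, k):
    """Yield (train, held_out) splits; every k-th point is held out."""
    for i in range(k):
        held_out = points[i::k]
        train = [p for j, p in enumerate(points) if j % k != i]
        yield train, held_out


def select_alpha(points, alphas, k=3):
    """Inner loop: pick the alpha with the lowest mean validation error."""
    def cv_error(alpha):
        errors = [mse(val, fit_ridge_1d(train, alpha))
                  for train, val in k_folds(points, k)]
        return sum(errors) / len(errors)
    return min(alphas, key=cv_error)


def nested_cv(points, alphas, outer_k=5):
    outer_errors = []
    for train, test in k_folds(points, outer_k):
        best_alpha = select_alpha(train, alphas)  # tuning sees only `train`
        w = fit_ridge_1d(train, best_alpha)
        outer_errors.append(mse(test, w))         # `test` played no part in tuning
    return sum(outer_errors) / len(outer_errors)


rng = random.Random(0)
data = []
for _ in range(60):
    x = rng.uniform(-2, 2)
    data.append((x, 3.0 * x + rng.gauss(0, 1.0)))  # true slope 3, unit noise

estimate = nested_cv(data, alphas=[0.01, 0.1, 1.0, 10.0])
print(estimate)
```

The extra cost is visible in the loops: with 5 outer folds, 3 inner folds, and 4 candidate values, the model is fitted 5 * (3 * 4 + 1) = 65 times instead of once.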

Training one model can be heavy. Training hundreds or thousands during hyperparameter tuning? It can get overwhelming. Methods like Hyperband help reduce wasted effort, and using faster proxies (like fewer training epochs early on) can give you a rough idea before committing to full training.

Sometimes, the performance you measure isn't stable — it can vary based on random seeds, sampling, or even minor changes. When your metric is noisy, it's harder to know if a hyperparameter setting is genuinely better or just got lucky. One trick is to repeat each evaluation several times and average the results, but that adds more computational cost.
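A sketch of the repeat-and-average trick follows. The `noisy_score` function is a hypothetical stand-in for one full training run, with made-up "true" qualities of 0.80 and 0.81 and a made-up noise level.

```python
import random
import statistics


def noisy_score(true_quality, seed):
    """Hypothetical stand-in for one training run; the seed shifts the result."""
    rng = random.Random(seed)
    return true_quality + rng.gauss(0, 0.02)


def evaluate(true_quality, seeds):
    scores = [noisy_score(true_quality, s) for s in seeds]
    mean = statistics.mean(scores)
    std_err = statistics.stdev(scores) / len(scores) ** 0.5
    return mean, std_err


mean_a, err_a = evaluate(0.80, seeds=range(0, 10))
mean_b, err_b = evaluate(0.81, seeds=range(100, 110))

gap = mean_b - mean_a
noise = (err_a ** 2 + err_b ** 2) ** 0.5
# Rough rule of thumb: only trust a gap that clearly exceeds the noise.
looks_real = gap > 2 * noise
print(round(gap, 4), round(noise, 4), looks_real)
```

Ten runs per setting shrink the uncertainty in each mean by roughly a factor of three compared with a single run, at ten times the cost.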

Want to get better results without draining all your time and computing resources? Here are some quick tips:

Prioritize Important Hyperparameters First: Not every setting matters equally. Focus on the ones that are most likely to make a big difference.

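Use Reasonable Ranges: Overly wide ranges waste trials on settings that were never going to work. Base your ranges on published defaults and prior experience where you can, and search scale-sensitive settings such as learning rate on a log scale.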

Start with Coarse Search: Try bigger steps at first to find rough areas that work well. Then zoom in later with finer searches.

Leverage Early Stopping: If a model looks bad early in training, stop it early instead of letting it slog through to the end.

Parallelize If You Can: Many optimization methods can try different settings at the same time. If you have the hardware for it, take advantage.
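As one example from the tips above, the coarse-then-fine idea can be sketched in a few lines. The score surface is hypothetical (it peaks at a learning rate of 10**-2.3), and the step sizes are arbitrary choices.

```python
def validation_score(log10_lr):
    """Hypothetical score surface that peaks at a learning rate of 10**-2.3."""
    return -((log10_lr + 2.3) ** 2)


# Pass 1: whole powers of ten from 1e-5 to 1.
coarse = [float(e) for e in range(-5, 1)]
coarse_best = max(coarse, key=validation_score)

# Pass 2: quarter-decade steps within one decade either side of the coarse winner.
fine = [coarse_best + 0.25 * i for i in range(-4, 5)]
fine_best = max(fine, key=validation_score)

print(coarse_best, fine_best, len(coarse) + len(fine))
```

The two passes use 15 evaluations in total, while a single quarter-decade grid over the full range would need 21. The saving is modest with one hyperparameter and grows quickly as you add more.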

Hyperparameter optimization might sound like a small technical detail, but it plays a huge role in how well your machine learning models turn out. Whether you go with grid search, random search, Bayesian optimization, or smarter resource allocation methods like Hyperband, putting effort into tuning pays off. A well-tuned model is typically more accurate and more reliable, and it often converges faster too. Skipping this step usually leaves results on the table.

If you’re serious about building models that perform the best they can, don’t treat hyperparameter optimization as an afterthought. Treat it as part of your model design from the start, and you’ll be setting yourself up for much stronger results.
